Zeros and Ones · Tutorials

Hand Gesture Recognition, Sign Language Translator

Dive into the intersection of Machine Learning, Web Development, and Image Processing to create a Hand Gesture Recognition-based Sign Language Translator.

Sign language is a vital communication tool for the deaf and hard-of-hearing communities. With advances in Machine Learning and Image Processing, it is now feasible to build tools that translate sign language gestures into text or speech in real time. This post walks through the creation of a Hand Gesture Recognition system that serves as a Sign Language Translator, combining key concepts from Machine Learning, Web Development, and Image Processing.

Understanding the Basics

Machine Learning in Gesture Recognition

Machine Learning is what turns a picture of a hand into a recognized gesture. A model trained on labeled images of hand gestures learns the visual patterns that distinguish one gesture from another, and can then classify images it has not seen before. Convolutional Neural Networks (CNNs) are commonly used for this purpose because their convolutional filters learn spatial features, such as edges and shapes, directly from pixels.
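
To make the idea of a convolutional filter concrete, here is a minimal sketch of one CNN stage (convolution, ReLU, 2x2 max pooling) in plain Python. The 6x6 `image` and the hand-written edge `kernel` are illustrative assumptions; a real CNN learns its kernel weights during training and would use a framework such as TensorFlow or PyTorch rather than nested lists.

```python
"""Toy forward pass of one CNN stage: convolution -> ReLU -> 2x2 max pooling."""


def conv2d(image, kernel):
    """Valid (no padding) 2D cross-correlation, which is what CNN layers compute."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def relu(fmap):
    """Clamp negative responses to zero."""
    return [[max(0, v) for v in row] for row in fmap]


def max_pool2x2(fmap):
    """Non-overlapping 2x2 max pooling; an odd trailing row or column is dropped."""
    return [
        [max(fmap[r][c], fmap[r][c + 1], fmap[r + 1][c], fmap[r + 1][c + 1])
         for c in range(0, len(fmap[0]) - 1, 2)]
        for r in range(0, len(fmap) - 1, 2)
    ]


# Hypothetical 6x6 grayscale patch: dark background (0) on the left, bright hand (9) on the right.
image = [[0, 0, 0, 9, 9, 9] for _ in range(6)]

# Hand-written vertical-edge kernel; a trained CNN would learn such weights from data.
kernel = [[-1, 0, 1],
          [-1, 0, 1],
          [-1, 0, 1]]

features = max_pool2x2(relu(conv2d(image, kernel)))
print(features)  # [[27, 27], [27, 27]]
```

The strong response comes from the dark-to-bright boundary in the patch. Stacking many such learned filters is what lets a CNN pick out finger and palm outlines.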

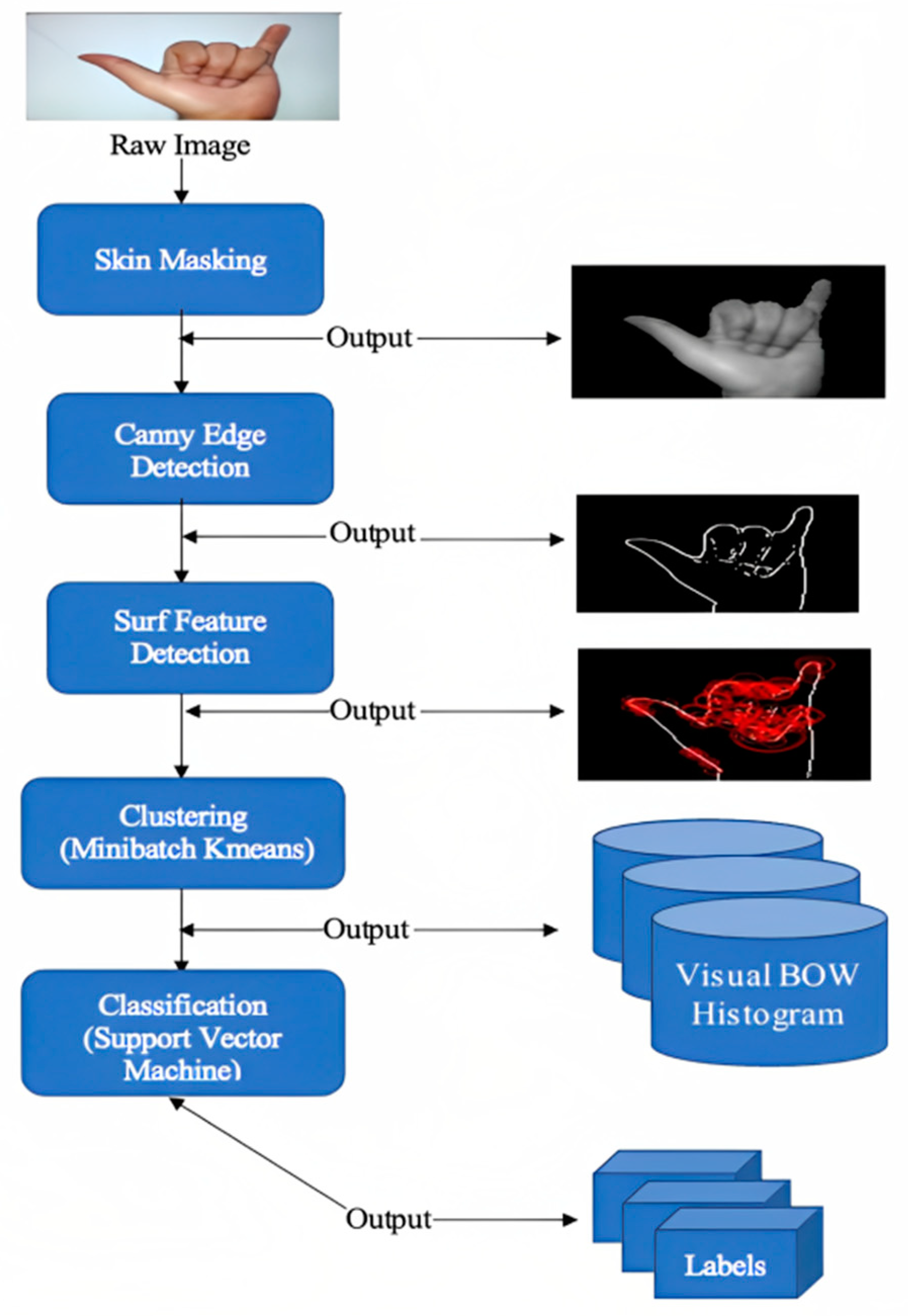

Example Image: A flowchart showing the process of training a CNN model for gesture recognition.

Image Processing Techniques

Image Processing prepares the hand gesture images for the model. Techniques such as background subtraction, noise reduction, and contour detection isolate the hand and clean up the image before it is fed into the Machine Learning model. Cleaner input generally makes gestures easier to recognize accurately.
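
The three steps can be sketched on a tiny synthetic frame. This is an illustration in plain Python, not a production pipeline: the 7x7 frame, the threshold of 30, and the use of a bounding box as a simple stand-in for contour detection are all assumptions. In practice a library such as OpenCV would handle these steps on real webcam frames.

```python
def subtract_background(frame, background, threshold=30):
    """Binary mask: 1 where the frame differs from the background by more than threshold."""
    return [
        [1 if abs(f - b) > threshold else 0 for f, b in zip(frow, brow)]
        for frow, brow in zip(frame, background)
    ]


def median_filter3x3(mask):
    """Noise reduction: replace each interior pixel with the median of its 3x3 neighbourhood."""
    h, w = len(mask), len(mask[0])
    out = [row[:] for row in mask]  # border pixels are copied unchanged
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            window = sorted(mask[r + i][c + j] for i in (-1, 0, 1) for j in (-1, 0, 1))
            out[r][c] = window[4]  # middle of the 9 sorted values
    return out


def bounding_box(mask):
    """Smallest (top, left, bottom, right) box containing every foreground pixel, or None."""
    coords = [(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v]
    if not coords:
        return None
    rows = [r for r, _ in coords]
    cols = [c for _, c in coords]
    return min(rows), min(cols), max(rows), max(cols)


# Synthetic data (assumed for illustration): a flat background, a bright 3x3 "hand",
# and a single bright noise pixel far from the hand.
background = [[10] * 7 for _ in range(7)]
frame = [row[:] for row in background]
for r in range(1, 4):
    for c in range(3, 6):
        frame[r][c] = 200
frame[5][1] = 255  # noise speck

raw_mask = subtract_background(frame, background)
clean_mask = median_filter3x3(raw_mask)

print(bounding_box(raw_mask))    # (1, 1, 5, 5): stretched by the noise pixel
print(bounding_box(clean_mask))  # (1, 3, 3, 5): just the hand region
```

Note that the median filter also rounds off the corners of the hand mask, a typical trade-off of noise reduction. The cropped region is what would be resized and passed to the model.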

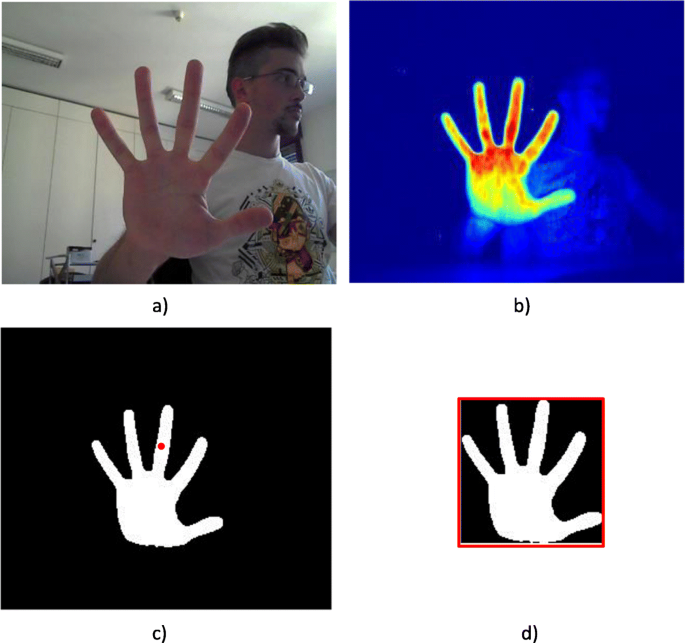

Example Image: An example of image processing steps, showing an original hand image, a pre-processed image, and the final segmented hand region.

Web Development for Real-Time Translation

Once the Machine Learning model is trained and tested, the next step is integrating it into a web application. Python web frameworks like Flask or Django can be used to build the backend, while frontend frameworks like React or Angular can be used to create a responsive, interactive user interface. Users show their hand gestures to a webcam, and the application processes and translates them in real time.
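
As a rough sketch of what the backend's inference endpoint might look like, the example below uses only Python's standard WSGI interface instead of Flask or Django, to stay dependency-free; the same handler logic would sit inside a Flask route or Django view. The `/predict` route, the `{"pixels": [...]}` payload, and the `classify_gesture` placeholder are all hypothetical names chosen for illustration.

```python
import io
import json


def classify_gesture(pixels):
    """Placeholder for real model inference (hypothetical): thresholds mean brightness."""
    mean = sum(pixels) / len(pixels)
    label = "open_hand" if mean > 0.5 else "fist"
    return label, round(abs(mean - 0.5) * 2, 2)


def app(environ, start_response):
    """WSGI entry point exposing POST /predict."""

    def reply(status, payload):
        body = json.dumps(payload).encode("utf-8")
        start_response(status, [("Content-Type", "application/json"),
                                ("Content-Length", str(len(body)))])
        return [body]

    if environ.get("PATH_INFO") != "/predict":
        return reply("404 Not Found", {"error": "unknown route"})
    if environ.get("REQUEST_METHOD") != "POST":
        return reply("405 Method Not Allowed", {"error": "use POST"})
    try:
        length = int(environ.get("CONTENT_LENGTH") or 0)
        data = json.loads(environ["wsgi.input"].read(length))
        pixels = [float(p) for p in data["pixels"]]
        if not pixels:
            raise ValueError("empty frame")
    except (KeyError, TypeError, ValueError):
        return reply("400 Bad Request", {"error": "expected JSON with a 'pixels' list"})

    label, confidence = classify_gesture(pixels)
    return reply("200 OK", {"label": label, "confidence": confidence})


def call_app(method, path, body=b""):
    """Invoke the WSGI app in-process (no socket); returns (status, parsed JSON)."""
    captured = {}

    def start_response(status, headers):
        captured["status"] = status

    environ = {
        "REQUEST_METHOD": method,
        "PATH_INFO": path,
        "CONTENT_LENGTH": str(len(body)),
        "wsgi.input": io.BytesIO(body),
    }
    payload = b"".join(app(environ, start_response))
    return captured["status"], json.loads(payload)


# To serve locally with the standard library only:
#   from wsgiref.simple_server import make_server
#   make_server("127.0.0.1", 8000, app).serve_forever()

frame = json.dumps({"pixels": [1.0, 1.0, 0.8]}).encode("utf-8")
print(call_app("POST", "/predict", frame))
```

The frontend would capture webcam frames in the browser and post them to this endpoint, displaying the returned label as the translation.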

Example Image: A screenshot of a web application interface showing real-time gesture recognition and translation.

Building Your Own Sign Language Translator

Step 1: Dataset Collection and Preprocessing

Begin by collecting a dataset of hand gestures representing different sign language symbols. These can be images or video frames. Preprocess the images using the Image Processing techniques mentioned above.
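
Before training, it helps to hold back part of the dataset for validation. The sketch below splits labeled samples so that every sign keeps roughly the same train/validation ratio. The one-folder-per-sign file layout, the 20% validation fraction, and the function name `stratified_split` are assumptions made for illustration.

```python
import random
from collections import defaultdict


def stratified_split(samples, val_fraction=0.2, seed=0):
    """Split (path, label) pairs so each label keeps roughly the same train/validation ratio.

    Every label contributes at least one validation sample.
    """
    by_label = defaultdict(list)
    for path, label in samples:
        by_label[label].append(path)

    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    train, val = [], []
    for label in sorted(by_label):
        paths = sorted(by_label[label])
        rng.shuffle(paths)
        n_val = max(1, round(len(paths) * val_fraction))
        val += [(p, label) for p in paths[:n_val]]
        train += [(p, label) for p in paths[n_val:]]
    return train, val


# Hypothetical file layout: one folder per sign, e.g. data/A/0003.png
samples = [(f"data/{label}/{i:04d}.png", label) for label in "ABC" for i in range(10)]
train, val = stratified_split(samples)
print(len(train), len(val))  # 24 6
```

Splitting per label avoids a validation set that accidentally contains none of a rarely recorded sign.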

Step 2: Model Training

Train a CNN on your preprocessed dataset using a framework such as TensorFlow or PyTorch. Tune the model for both high accuracy and low inference latency, since a real-time application needs both.
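
One way to keep both goals in view is to measure them together. The helper below is a minimal sketch that works with any `predict(x) -> label` callable, whether it wraps a TensorFlow model, a PyTorch model, or something else. The `toy_predict` function and the sample data are hypothetical stand-ins for a trained model and a validation set.

```python
import statistics
import time


def evaluate(predict, samples, warmup=3):
    """Return overall accuracy and per-call latency (ms) for a predict(x) -> label callable."""
    for x, _ in samples[:warmup]:
        predict(x)  # warm-up calls are not timed

    correct, latencies_ms = 0, []
    for x, expected in samples:
        start = time.perf_counter()
        got = predict(x)
        latencies_ms.append((time.perf_counter() - start) * 1000)
        correct += (got == expected)

    return {
        "accuracy": correct / len(samples),
        "mean_ms": statistics.mean(latencies_ms),
        "max_ms": max(latencies_ms),
    }


def toy_predict(features):
    """Hypothetical stand-in for a trained model: picks the label with the largest score."""
    return "ABC"[features.index(max(features))]


samples = [
    ([0.9, 0.1, 0.0], "A"),
    ([0.2, 0.7, 0.1], "B"),
    ([0.1, 0.2, 0.7], "C"),
    ([0.6, 0.3, 0.1], "B"),  # deliberately misclassified as "A"
]
report = evaluate(toy_predict, samples)
print(report["accuracy"])  # 0.75
```

For a webcam feed, the per-frame latency has to fit inside the frame interval, so the maximum matters as much as the mean.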

Step 3: Web Application Development

Develop the web application that will host the sign language translator. Set up the backend to handle model inference and the frontend to provide a user-friendly interface. Implement real-time gesture recognition using a webcam.
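
Per-frame predictions from a webcam tend to flicker between labels as the hand moves. The post does not prescribe a fix, but one common approach, sketched here as an assumption, is to report a label only once it wins a majority of the most recent frames. The class name `PredictionSmoother` and the window of 5 frames are illustrative choices.

```python
from collections import Counter, deque


class PredictionSmoother:
    """Emit a label only when it wins a strict majority of the last `window` frames."""

    def __init__(self, window=5):
        self.recent = deque(maxlen=window)

    def update(self, label):
        """Record one per-frame prediction; return the stable label, or None if undecided."""
        self.recent.append(label)
        winner, count = Counter(self.recent).most_common(1)[0]
        # Require a full window and a strict majority before committing to a label.
        if len(self.recent) == self.recent.maxlen and count > self.recent.maxlen // 2:
            return winner
        return None


smoother = PredictionSmoother(window=5)
stream = ["A", "A", "B", "A", "A", "B", "B", "B", "B"]  # hypothetical per-frame outputs
stable = [smoother.update(label) for label in stream]
print(stable)  # [None, None, None, None, 'A', 'A', 'B', 'B', 'B']
```

The stray "B" in the third frame never reaches the user, at the cost of a few frames of delay before each new gesture is reported.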

Step 4: Testing and Deployment

Test the application thoroughly with various hand gestures to ensure robustness. Once satisfied, deploy the application using cloud platforms like AWS or Heroku.
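
Overall accuracy can hide gestures that are consistently mistaken for one another. A per-gesture breakdown, sketched below in plain Python, makes those weak spots visible during testing. The labels and predictions are made-up illustration data, not results from a real model.

```python
from collections import Counter


def per_class_recall(expected, predicted):
    """Fraction of each expected gesture that was recognised correctly."""
    totals = Counter(expected)
    hits = Counter(e for e, p in zip(expected, predicted) if e == p)
    return {label: hits[label] / totals[label] for label in sorted(totals)}


def confused_pairs(expected, predicted):
    """(expected, predicted) pairs that disagree, most frequent first."""
    errors = Counter((e, p) for e, p in zip(expected, predicted) if e != p)
    return errors.most_common()


# Made-up test run: the signs for M and N are sometimes mistaken for each other.
expected = ["A", "A", "A", "M", "M", "N", "N", "N"]
predicted = ["A", "A", "A", "M", "N", "N", "M", "N"]

recall = per_class_recall(expected, predicted)
print(recall["A"], recall["M"])  # 1.0 0.5
print(confused_pairs(expected, predicted))
```

Gestures with low recall are good candidates for collecting more training images before deployment.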

Conclusion

Creating a Hand Gesture Recognition-based Sign Language Translator is an exciting project that brings together the power of Machine Learning, Web Development, and Image Processing. It not only showcases the technical aspects of these domains but also contributes to making communication more inclusive. Start building your own translator today and make a difference in the way we interact with technology.